Have you ever wondered about the clever computer programs that seem to understand what you say, or even write new things all on their own? It's a bit like magic, isn't it? In the fast-moving world of artificial intelligence, two big ideas often come up: Natural Language Processing, or NLP, and Generative AI. People sometimes use these terms as if they mean the very same thing, and that can get a little confusing.

It's a common question: are these two really just different names for the same kind of smart computer trick? Or is one a part of the other? Understanding how they fit together, and where they don't, can really help make sense of the amazing things computers are doing with language these days.

This discussion aims to clear up the muddle around these concepts, offering a simple look at what each one means and how they work side by side. We'll explore their unique features and, perhaps more importantly, how they team up to create some of the most impressive language-based AI tools we see around us.

Table of Contents

- What is Natural Language Processing (NLP)?

- What is Generative AI?

- So, Is NLP Generative AI? Unpacking the Connection

- Beyond the Basics: Real-World Impact

- Frequently Asked Questions

- Final Thoughts

What is Natural Language Processing (NLP)?

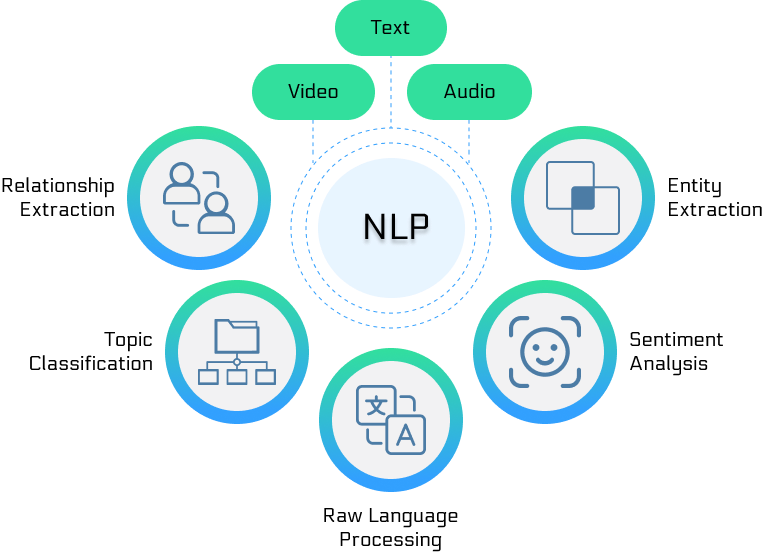

Natural Language Processing, or NLP, is a branch of artificial intelligence. It helps computers work with human language, the way we speak and write. Think of it as teaching a computer to hear, read, and make sense of words, much as people do.

For a long time, computers have had trouble with the nuances of human talk. Our language has so many different meanings and ways to put words together. NLP tries to bridge that gap, making it possible for machines to process and understand vast amounts of text or speech data.

It’s about taking human language, which is often messy and full of quirks, and turning it into something a computer can actually use. This involves a lot of steps, from breaking down sentences to figuring out the feelings behind the words.

How NLP Helps Computers Understand Us

How does a computer begin to understand a phrase like "I'm feeling blue"? A person knows that means sadness, not a color, right? NLP systems learn to pick up on these sorts of things.

It starts with breaking down sentences into smaller bits, like individual words or phrases. Then the system might look at the grammar, the way words are put together, and even the context of the conversation.

These systems use various methods to grasp meaning. They might identify parts of speech, like nouns and verbs, or recognize named things, such as people, places, or organizations. This foundational work is really important for any deeper processing.
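The first two steps described above, splitting a sentence into pieces and labeling parts of speech, can be sketched in a few lines of Python. This is only a toy illustration: the `TOY_POS_LEXICON` table and both function names are made up for this example, and real NLP systems learn their labels from large annotated datasets rather than from a hand-written lookup.

```python
import re

# Hypothetical mini-lexicon mapping tokens to parts of speech. Real systems
# learn these tags from annotated data rather than a hand-written table.
TOY_POS_LEXICON = {
    "i'm": "PRONOUN+VERB",
    "feeling": "VERB",
    "blue": "ADJECTIVE",
    "today": "ADVERB",
}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens, keeping contractions together."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def tag_parts_of_speech(tokens: list[str]) -> list[tuple[str, str]]:
    """Look each token up in the toy lexicon; unknown words get 'UNKNOWN'."""
    return [(token, TOY_POS_LEXICON.get(token, "UNKNOWN")) for token in tokens]


tokens = tokenize("I'm feeling blue today.")
tagged = tag_parts_of_speech(tokens)
print(tagged)
```

Notice that the lookup can say "blue" is an adjective, but it has no idea the phrase means sadness. Getting from labels to meaning is exactly where context comes in.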

For example, when you ask a voice assistant a question, NLP is the technology working behind the scenes. It takes your spoken words, turns them into text, and then tries to figure out what you're asking.

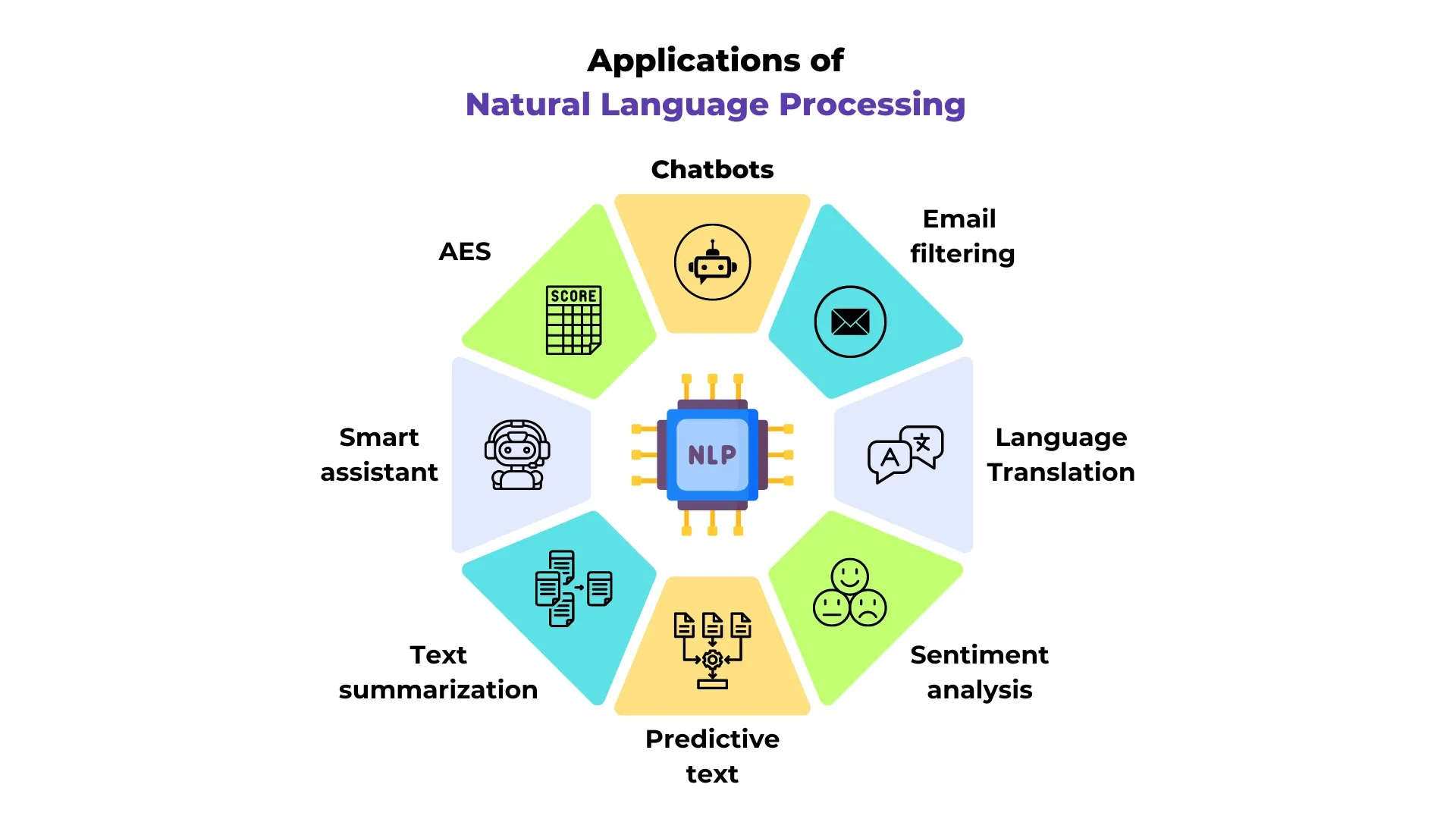

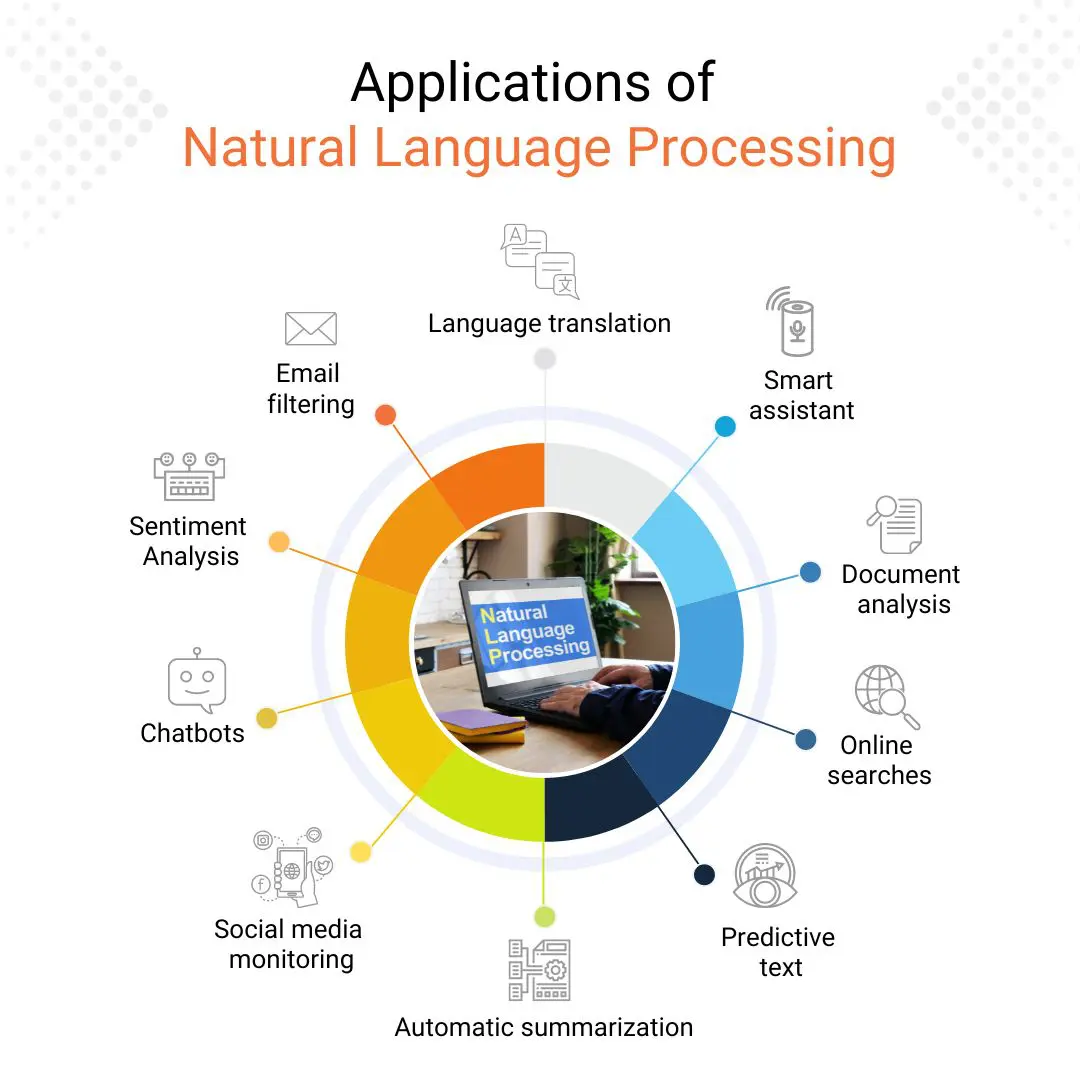

Key Areas of NLP

There are several different parts to NLP, each with its own job. One big area is "sentiment analysis," which looks at text to figure out the emotion or feeling expressed, like whether someone is happy, sad, or angry.

Another area is "machine translation." This is what allows tools to change text from one human language to another, say from English to Spanish. It's quite a complex task, because it's not just about swapping words, but also about keeping the meaning and flow.

"Speech recognition" is also a major part, turning spoken words into written text. This is what makes voice commands possible on your phone or smart speaker. Then there's "text summarization," which takes a long piece of writing and boils it down to the main points, making it shorter and easier to read.

So, NLP is a broad field. It covers many ways computers can work with and make sense of human language. Much of it is about taking in information and figuring out what it means, although some tasks, such as translation and summarization, also produce text as their result.
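To give a feel for sentiment analysis, the first of the areas above, here is a deliberately simple Python sketch that counts cue words. The word lists and the `sentiment_label` function are assumptions made up for this illustration. Practical sentiment systems rely on trained models or far larger lexicons.

```python
import re

# Hypothetical cue-word lists; production systems use learned models or
# much larger curated lexicons.
POSITIVE_WORDS = {"great", "love", "happy", "excellent", "good"}
NEGATIVE_WORDS = {"bad", "terrible", "sad", "angry", "hate"}


def sentiment_label(text: str) -> str:
    """Classify text as positive, negative, or neutral by counting cue words."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"


print(sentiment_label("I love this phone, the screen is great"))
print(sentiment_label("I'm feeling blue"))
```

The second call comes back as "neutral" because "blue" is not in either list, which shows why word counting alone misses figurative language and why real systems look at context.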

What is Generative AI?

Generative AI, on the other hand, is a different kind of smart computer program. Its main purpose is to create something new, something that didn't exist before. This could be text, pictures, music, or even computer code.

Unlike traditional AI that might just sort or analyze data, generative AI produces new content. It learns patterns from huge amounts of existing data and then uses those learned patterns to generate fresh outputs.

Think of it like an artist who has studied many paintings. They don't just recognize art; they can create their own original pieces inspired by what they've seen. That's what generative AI aims to do, but with data.

This field has advanced very quickly in recent years, especially with the rise of new models. These models can produce things that look or sound incredibly real, often to the point where it's hard to tell whether a human or a machine made them.

The "Creation" Aspect of Generative AI

The key idea with generative AI is its ability to "generate." It doesn't just pull information from a database; it constructs new data points. This creation process involves complex mathematical models and a lot of training data.

For instance, if you give a generative AI model a prompt like "write a short story about a talking cat," it won't search for an existing story. Instead, it will come up with a brand new one, using the patterns it learned from the countless other stories it was trained on.

This means it can be incredibly flexible. It can adapt to many different kinds of requests and produce a wide range of outputs. It's not limited to a set of pre-programmed responses, which is a big deal.

The output it creates is often quite novel and imaginative. This is what makes it so exciting for things like creative writing, art, and even designing new products.

Examples of Generative AI in Action

You've probably seen examples of generative AI even if you didn't call it that. Tools that can write essays or emails for you are forms of generative AI. They take your prompt and create written text.

Picture-generating tools, where you type a description and the tool creates an image, are also great examples. You might ask for "a robot walking a dog in a park at sunset," and it will draw that scene, even if it has never seen that exact combination before.

Music creation is another area. Some generative AI can compose new songs in various styles from just a few instructions. This shows how it can work with different kinds of data, not just words.

Even things like making realistic faces of people who don't exist, or designing new molecules for medicine, are uses of generative AI. It's about building something from scratch, based on what it has learned.

So, Is NLP Generative AI? Unpacking the Connection

Now, for the big question: Is NLP generative AI? The simple answer is, not always, but it can be, and the two often overlap. NLP is a wider field focused on helping computers understand and work with human language.

Generative AI is a type of AI that creates new things. When that "new thing" is human language, like text or speech, generative AI is using NLP principles to do its job. So, you can think of it like this: all generative AI that deals with language uses NLP, but not all NLP is generative AI.

For example, an NLP system that just analyzes customer reviews to tell if they're positive or negative is doing NLP, but it's not generative. It's not creating new reviews. However, a system that writes a new customer review based on a few keywords *is* generative AI, and it relies heavily on NLP to do that writing.

It's a bit like asking "is a car a vehicle?" Yes, but not all vehicles are cars. A bicycle is a vehicle, but not a car. Similarly, language generation is a specific kind of NLP application, but NLP covers much more than just generation.

NLP as a Foundation for Generative AI

Think of NLP as the essential groundwork. Before a generative AI model can write a compelling story, it first needs to capture how sentences are built, what words mean, and how ideas connect. That understanding comes from NLP.

Generative AI models that create text, like those that write articles or poems, are built upon deep NLP capabilities. They use NLP to process the vast amounts of text they've learned from, picking up grammar, style, and meaning.

This foundational understanding allows them to then produce text that sounds natural and makes sense to a human reader. Without NLP, generative AI wouldn't be able to handle the complexities of human language.

It's the core skill that lets these models not just parrot back what they've seen, but construct novel sentences and paragraphs. So, NLP is a bit like the language school that generative AI attends before it can start writing its own books.

When NLP Becomes Generative

NLP becomes generative when its purpose shifts from just understanding or analyzing language to actually producing it. This is where the lines blur a little, but it's a very important distinction.

Consider a chatbot. An older, rule-based chatbot might use NLP to understand your question and then pick a pre-written answer. That's NLP, but not generative. A modern, more advanced chatbot, however, will use generative AI to craft a unique, on-the-spot response to your question.

This is where the power of modern AI really shines. It's not just recognizing patterns; it's creating new ones. This means it can answer questions it has never seen before, or write text on topics it wasn't specifically programmed for.

So, when you see an AI writing an email, summarizing a document in its own words, or even crafting a new piece of creative writing, that's NLP working in a generative way. It's a very specific and powerful application of NLP.
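To make the older, rule-based side of that chatbot contrast concrete, here is a minimal Python sketch. The `CANNED_ANSWERS` table, the fallback text, and the `rule_based_reply` function are all hypothetical names invented for this example. The point is simply that every possible reply is written in advance, and the program only chooses among them.

```python
# Hypothetical keyword-to-answer table for a rule-based support bot.
CANNED_ANSWERS = {
    "refund": "You can request a refund from the Orders page.",
    "shipping": "Standard shipping usually takes three to five business days.",
    "password": "Use the 'Forgot password' link on the sign-in screen.",
}
FALLBACK_ANSWER = "Sorry, I don't have an answer for that yet."


def rule_based_reply(question: str) -> str:
    """Return the first pre-written answer whose keyword appears in the question."""
    lowered = question.lower()
    for keyword, answer in CANNED_ANSWERS.items():
        if keyword in lowered:
            return answer
    # Nothing matched, so the bot cannot improvise; it can only fall back.
    return FALLBACK_ANSWER


print(rule_based_reply("How do I get a REFUND?"))
print(rule_based_reply("Tell me a joke"))
```

A generative chatbot has no such table. It composes its reply word by word, which is why it can respond to requests nobody anticipated.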

The Role of Large Language Models (LLMs)

Large Language Models, or LLMs, are a big part of why generative AI is so talked about today. They are a type of generative AI model that is particularly good at working with language, and they are trained on truly enormous amounts of text data.

LLMs are among the most capable generative NLP systems today. They learn the statistical relationships between words and phrases, which lets them predict a likely next word given the text so far. Repeating that prediction step over and over lets them generate coherent and contextually relevant text.

When you interact with a well-known AI assistant, you are likely interacting with an LLM. These models are not just understanding your input (which is NLP); they are also generating a response that feels natural and helpful (which is generative AI, built on NLP).

Their ability to generate human-like text has really changed how we think about AI and language. They are a prime example of how NLP principles are used to create powerful generative capabilities. You can learn more about how these models operate at a fundamental level by exploring resources like Hugging Face's documentation on language modeling.
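The "predict the next word" idea can be shown at a tiny scale with simple word-pair counts. Treat the Python sketch below as a rough analogy only: the corpus and function names are invented for this example, and real LLMs use neural networks over subword tokens trained on vastly more text, not a table of counts.

```python
from collections import Counter, defaultdict

# Tiny hypothetical corpus; real LLMs train on vastly more text and use
# neural networks over subword tokens rather than raw word counts.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def build_bigram_counts(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn the follower counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(counts: dict[str, Counter], start: str, max_words: int = 6) -> str:
    """Greedily extend the text by always picking the most frequent follower."""
    words = [start]
    while len(words) < max_words and counts.get(words[-1]):
        words.append(counts[words[-1]].most_common(1)[0][0])
    return " ".join(words)


counts = build_bigram_counts(CORPUS)
print(next_word_probabilities(counts, "the"))
print(generate(counts, "the"))
```

In this corpus "cat" follows "the" more often than any other word, so the sketch always continues "the" with "cat". LLMs usually sample from the predicted probabilities instead of always taking the top choice, which is one reason the same prompt can produce different responses.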

Beyond the Basics: Real-World Impact

The combination of NLP and generative AI is changing many parts of our daily lives, often without us even realizing it. From how we search for information to how we create content, their influence is growing.

This partnership between understanding language and creating language is opening up possibilities that were once just ideas in science fiction stories. It's a pretty exciting time to watch these technologies develop.

They are making tools more helpful and more intuitive for everyday people. This means less time spent on repetitive tasks and more time for creative or complex problem-solving.

Transforming Communication

Think about how customer service is changing. Many companies now use AI-powered chatbots that can answer questions instantly and even hold conversations that feel quite natural. These chatbots use NLP to understand what you're asking and generative AI to craft their replies.

Email writing tools can suggest sentences or even compose entire drafts based on a few keywords. This makes writing faster and often more polished. It's a handy helper for busy people.

Even in education, these tools are starting to make a mark. They can help students with writing assignments by providing feedback or generating ideas. They can also help people learn new languages by creating practice conversations.

So, the way we talk to computers, and even how we talk to each other through digital means, is getting a big update thanks to these technologies. It's making communication smoother and more efficient.

New Possibilities for Content Creation

For anyone who creates things (writers, artists, marketers), generative AI, powered by NLP, is a very useful new tool. It can help brainstorm ideas, write first drafts, or even create completely new pieces of content.

Imagine needing a quick blog post on a specific topic. A generative AI tool can produce a draft in minutes, saving a lot of time and effort. While the draft still needs a human touch, it provides a great starting point.

In marketing, it can generate different versions of ad copy so you can see which ones perform best. This kind of quick iteration was much harder before. It helps businesses connect with their audience more effectively.

For creative writers, it can help overcome writer's block by suggesting plot twists or character dialogue. It's not replacing human creativity, but rather giving it a powerful boost.

Frequently Asked Questions

- Is NLP a type of AI?

- Yes, absolutely. Natural Language Processing (NLP) is a specific branch or field within the broader area of artificial intelligence. It focuses on how computers and human language can interact, allowing machines to understand, interpret, and generate human-like text and speech.

- What is the main difference between NLP and generative AI?

- The main difference lies in their primary function. NLP is generally about understanding, analyzing, and processing human language, like figuring out what a sentence means or identifying keywords. Generative AI, on the other hand, is about creating new, original content, which can include new text, images, or audio. When generative AI creates language, it relies on NLP capabilities to do so.

- Can NLP models be generative?

- Yes, they certainly can be. While NLP as a field covers many tasks that are not generative (like sentiment analysis or recognizing named people, places, and organizations), a significant and growing part of NLP involves generative models. Large Language Models (LLMs) are a prime example of NLP models that are generative, as they produce new text based on prompts and learned patterns.

Final Thoughts

So, when we ask, "is NLP generative AI?", the picture becomes clearer: NLP is the big umbrella that covers how computers work with language, and generative AI is a very powerful type of AI that can create new things. When that creation involves language, generative AI is definitely using NLP to do its magic. It's a relationship in which a broader field provides the tools for a specific, very impressive kind of creation.

Understanding this distinction helps us appreciate the cleverness behind the AI tools we use every day. These technologies are only going to become more common and more capable, changing how we interact with information and how we create things. It's a pretty fascinating journey to be on, watching these developments unfold.